Members of the European Parliament have voted overwhelmingly to approve a new world-leading set of rules on artificial intelligence.

They are designed to make sure humans stay in control of the technology – and that it benefits the human race.

So what’s changing?

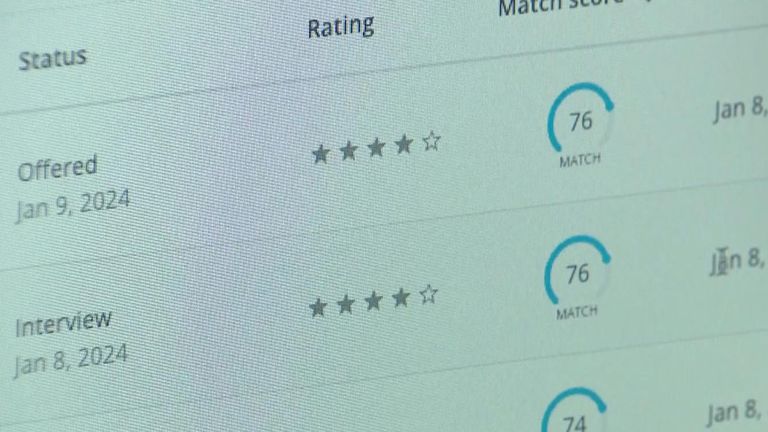

The rules are risk-based. The riskier the effects of an artificial intelligence (AI) system, the more scrutiny it faces. For example, a system that makes recommendations to users would be counted as low-risk, while an AI-powered medical device would be high-risk.

The EU expects most AI applications to be low-risk, and activities have been grouped by type to make sure the laws stay relevant long into the future.

If a new technology uses AI for policing, for example, it will need more scrutiny.

In almost all cases, companies will have to make it obvious when the technology has been used.

Companies behind higher-risk systems will have to provide clear information to users, and keep high-quality data on their product.

The Artificial Intelligence Act bans applications that are deemed “too risky”. Those include the police using AI-powered technology to identify people – although in very serious cases, this could be allowed.

Some types of predictive policing, in which AI is used to predict future crimes, are also banned, as are systems that track the emotions of students at schools or employees at their workplaces.

Deepfakes – AI-generated or manipulated pictures, video or audio of existing people, places or events – must be labelled to avoid disinformation spreading.

Companies that develop AI for general use, like Google or OpenAI, will have to follow EU copyright law when it comes to training their systems. They will also have to provide detailed summaries of the information they have fed into their models.

The most powerful AI models, like OpenAI’s GPT-4 and Google’s Gemini, will face extra scrutiny. The EU says it is worried these powerful AI systems could “cause serious accidents or be misused for far-reaching cyber attacks”.

OpenAI and Microsoft recently identified groups affiliated with Russia, China, Iran and North Korea using their systems.

Will any of this impact the UK?

In a word, yes. The Artificial Intelligence Act is groundbreaking and governments are looking at it closely for inspiration.

“It is being called the Brussels effect,” says Bruna de Castro e Silva, an AI governance specialist at Saidot. “Other jurisdictions look to what is being done in the European Union.

“They are following the legislation process, all the guidelines, frameworks, ethical principles. And it has already been replicated in other countries. The risk-based approach is already being suggested in other jurisdictions.”

The UK has AI guidelines – but they are not legally binding.

In November, at the global AI Safety Summit at Bletchley Park, AI developers agreed to work with governments to test new models before they are released, in an attempt to help manage the risks of the technology before it reaches the public.

Prime Minister Rishi Sunak also announced Britain would set up the world’s first AI safety institute.

It is not just governments that are watching the EU’s new laws closely. The tech industry has lobbied hard to make sure the rules work in its favour, and many companies are already adopting similar rules.

Meta, which owns Facebook, Instagram and WhatsApp, requires AI-modified images to be labelled, as does X.

On Tuesday, Google restricted its Gemini chatbot from talking about elections in countries voting this year, to reduce the risk of spreading disinformation.

Ms Castro e Silva says it would be smart for companies to adopt these rules into the way they work internationally, instead of just in the EU.

“They wouldn’t have different ways of working [around the world], different standards to work to, different compliance mechanisms internally.

“The whole company would have a similar way of thinking about AI governance and responsible AI inside their organisation.”

Although the industry generally supports better regulation of AI, OpenAI’s chief executive Sam Altman raised eyebrows last year when he suggested the ChatGPT maker could pull out of Europe if it cannot comply with the AI Act.

He soon backtracked to say there were no plans to leave.

The rules will start coming into force in May 2025.